English to Arabic Machine Translation

A sequence-to-sequence model with LSTM layers that translates English text to Arabic with high accuracy.

Project Overview

This project implements a neural machine translation system that converts English text to Arabic using a bidirectional LSTM encoder-decoder architecture with attention mechanisms.

The model learns the complex linguistic patterns between English and Arabic, handling challenges like different word orders, morphological complexity in Arabic, and the right-to-left writing system. It demonstrates the power of deep learning in language translation tasks.

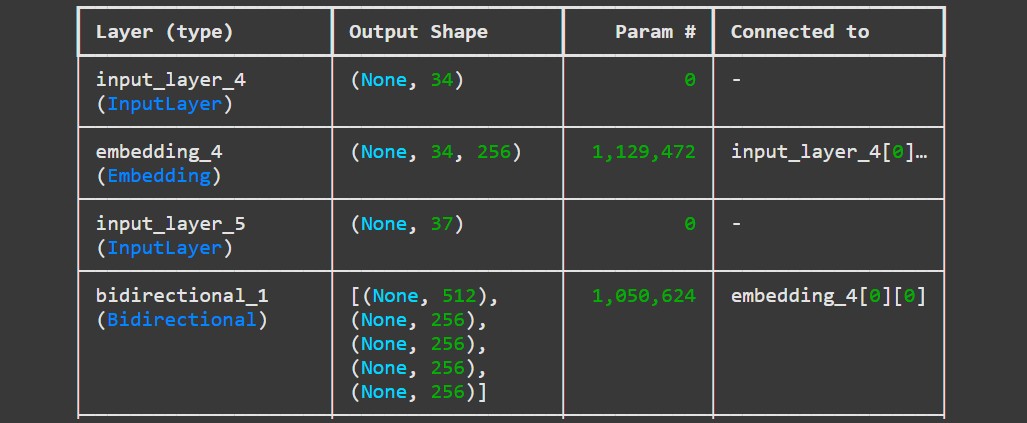

Model Architecture

Technical Highlights

Encoder Network

- Bidirectional LSTM architecture

- 256-dimensional word embeddings

- 512 hidden units (256 forward + 256 backward)

- Handles variable-length English sequences

Decoder Network

- LSTM with 512 hidden units

- Attention mechanism for better alignment

- Teacher forcing during training

- Generates Arabic tokens sequentially

Training Process

- Batch size: 32

- Epochs: 20

- Optimizer: Adam (lr=1e-4)

- Loss: Sparse categorical crossentropy

Technologies

- TensorFlow/Keras for model building

- PyArabic for text normalization

- Pandas for data handling

- Google Colab for GPU acceleration

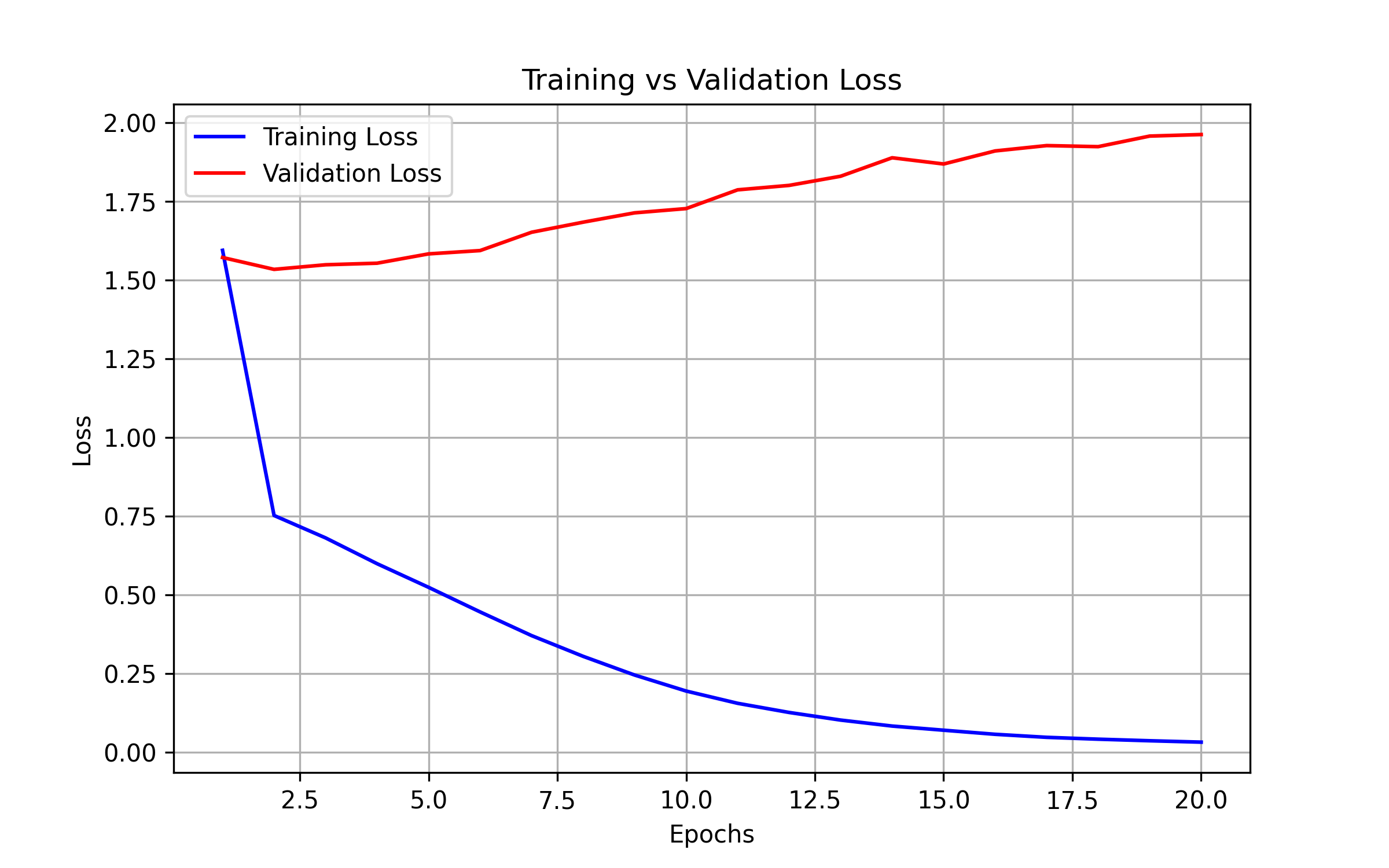

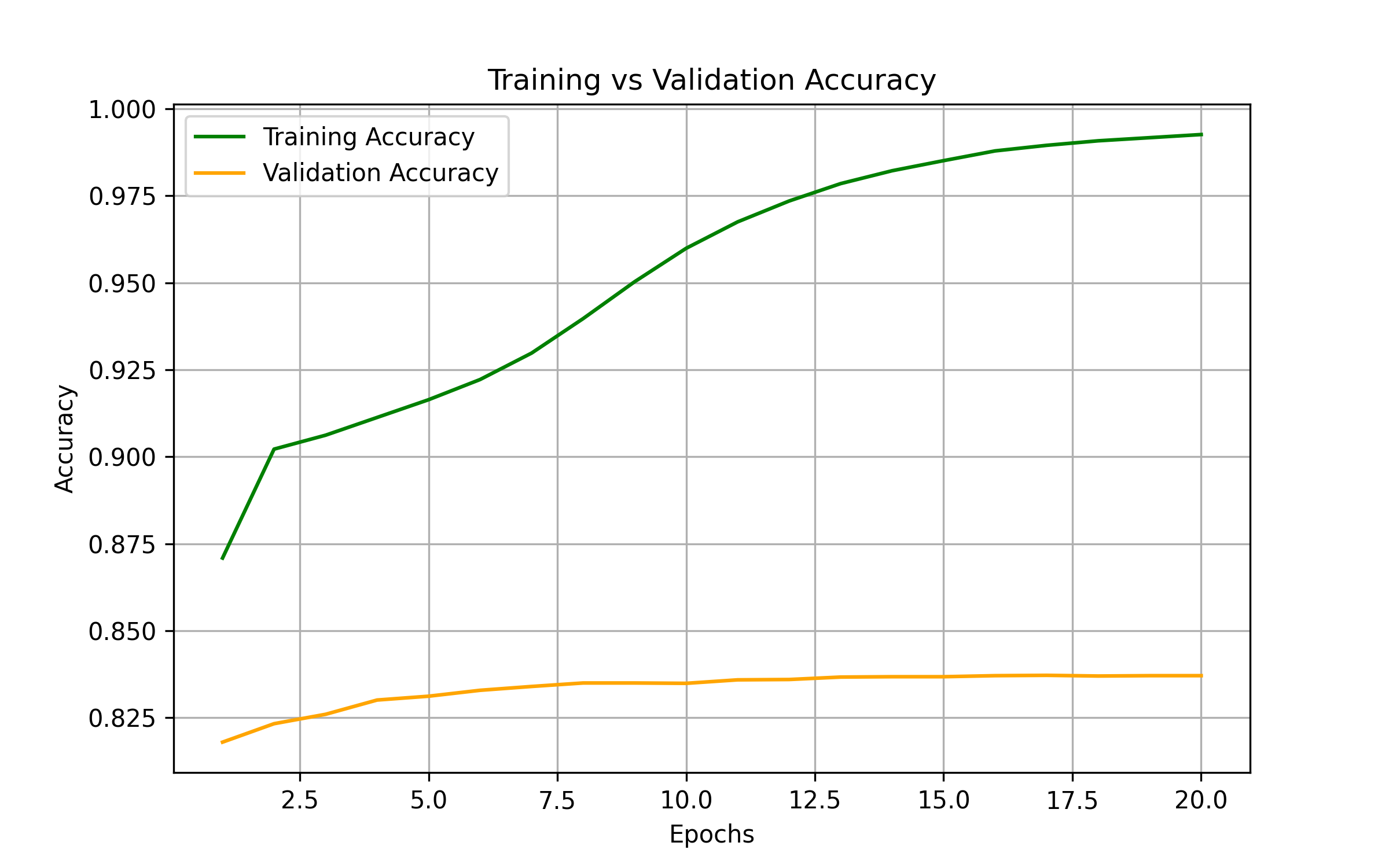

Training Results

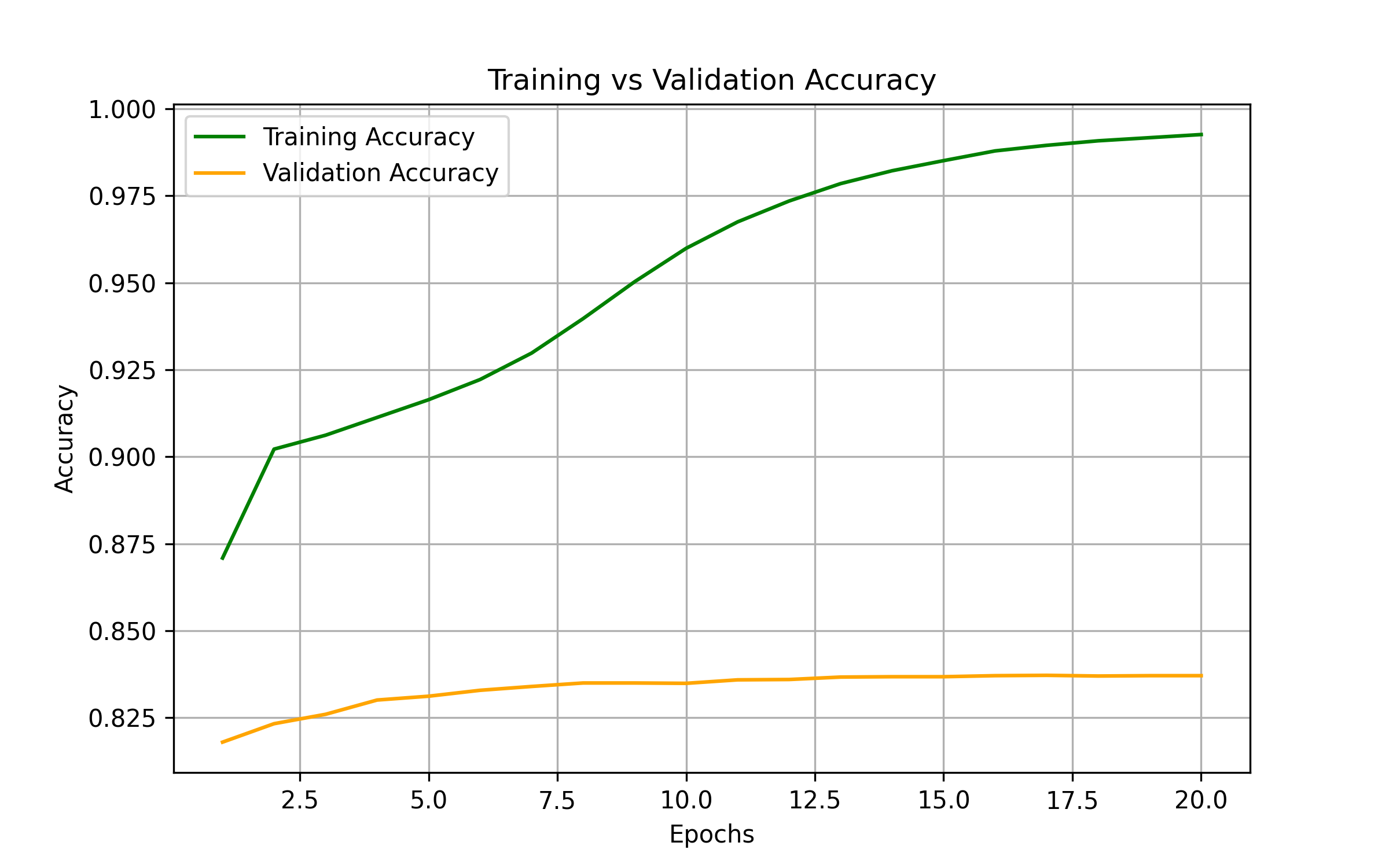

Training Accuracy

99.2%

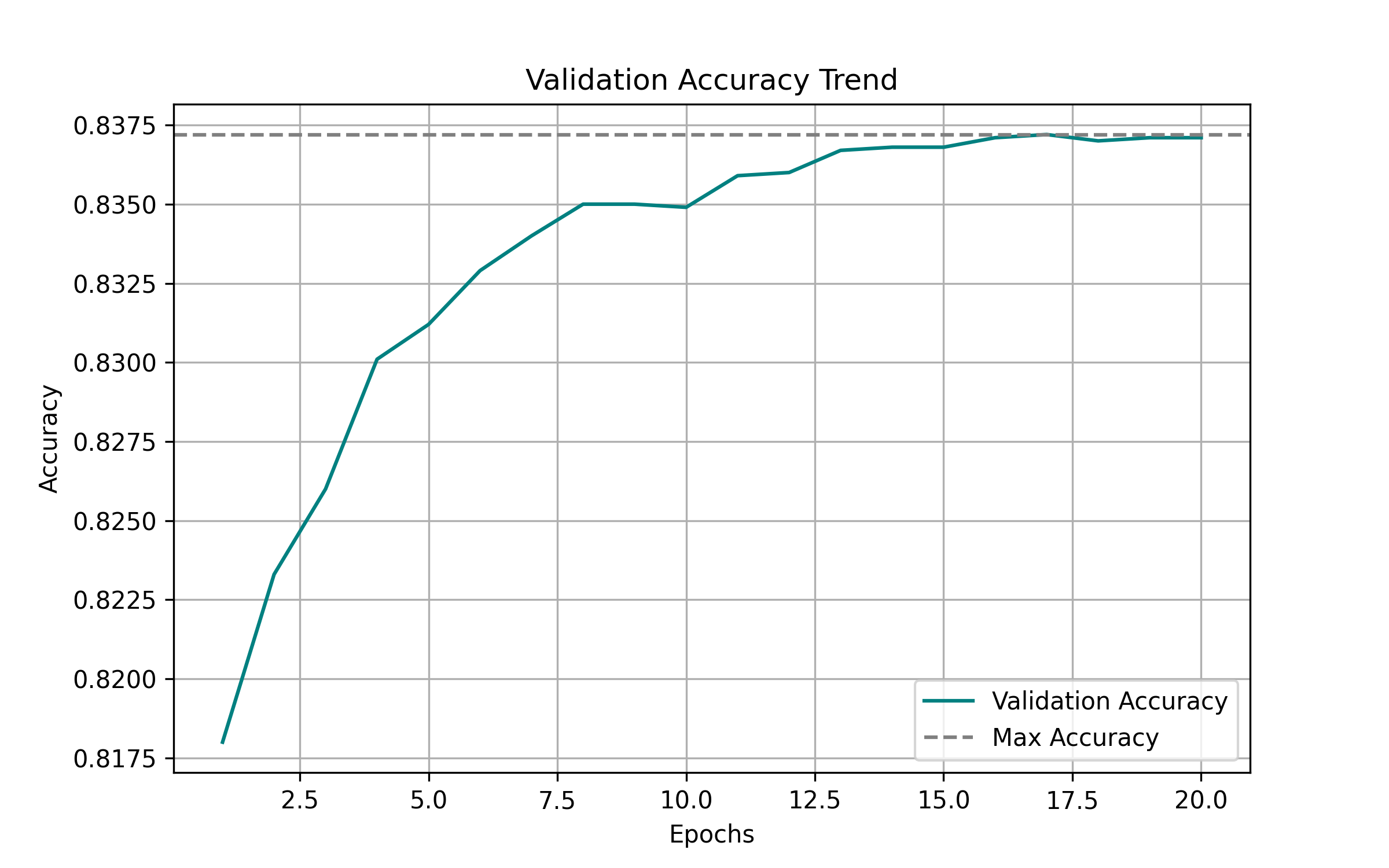

Final epochValidation Accuracy

83.7%

Final epochTraining Time

~3 hours

On Colab GPUTraining Dynamics

The training process showed:

- Initial Phase: Rapid improvement in basic word matching

- Middle Phase: Learning of grammatical structures and word order

- Final Phase: Refinement of nuanced translations and rare words

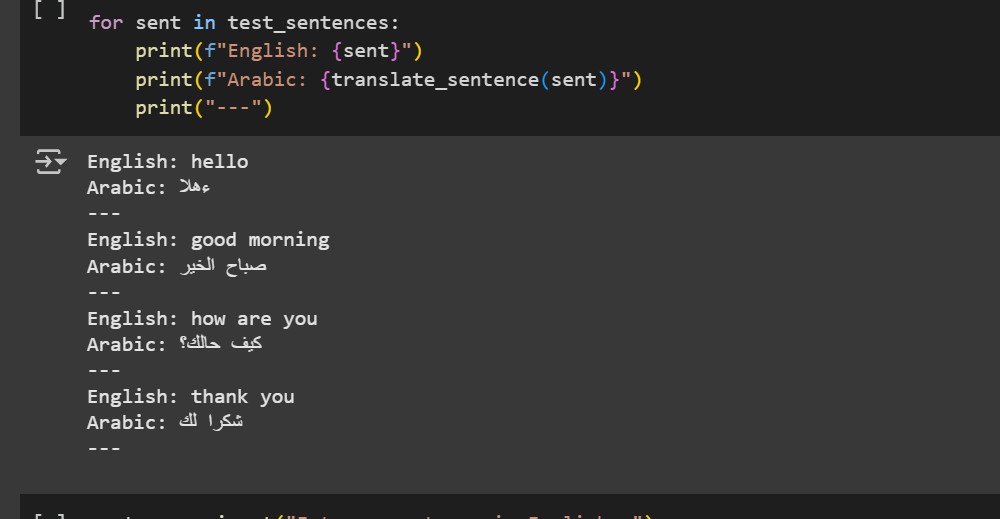

Translation Examples

English:

"Hello, how are you today?"

Arabic:

"مرحبا، كيف حالك اليوم؟"

English:

"Where is the nearest hospital?"

Arabic:

"أين يوجد أقرب مستشفى؟"

English:

"I need help with my homework."

Arabic:

"أحتاج مساعدة في واجبي المدرسي."

Project Links

View Source Code View Visualizations Download Jupyter File📊 Project Details

- Status: Completed

- Dataset: 12,523 sentence pairs

- Vocabulary: 10,928 English words 12,090 Arabic words

- Framework: TensorFlow 2.x

Technology Stack

Core Libraries

TensorFlow Keras Pandas NumPy PyArabicModel Architecture

LSTM Bidirectional Attention EmbeddingsTraining

Adam Optimizer Teacher Forcing GPU AccelerationDataset Information

Translation Pairs

- 12,523 English-Arabic sentence pairs

- Diverse sentence structures

- Common phrases and expressions

- Multiple translations for some phrases

Preprocessing

Text normalization, tokenization, sequence padding

Training Visualizations

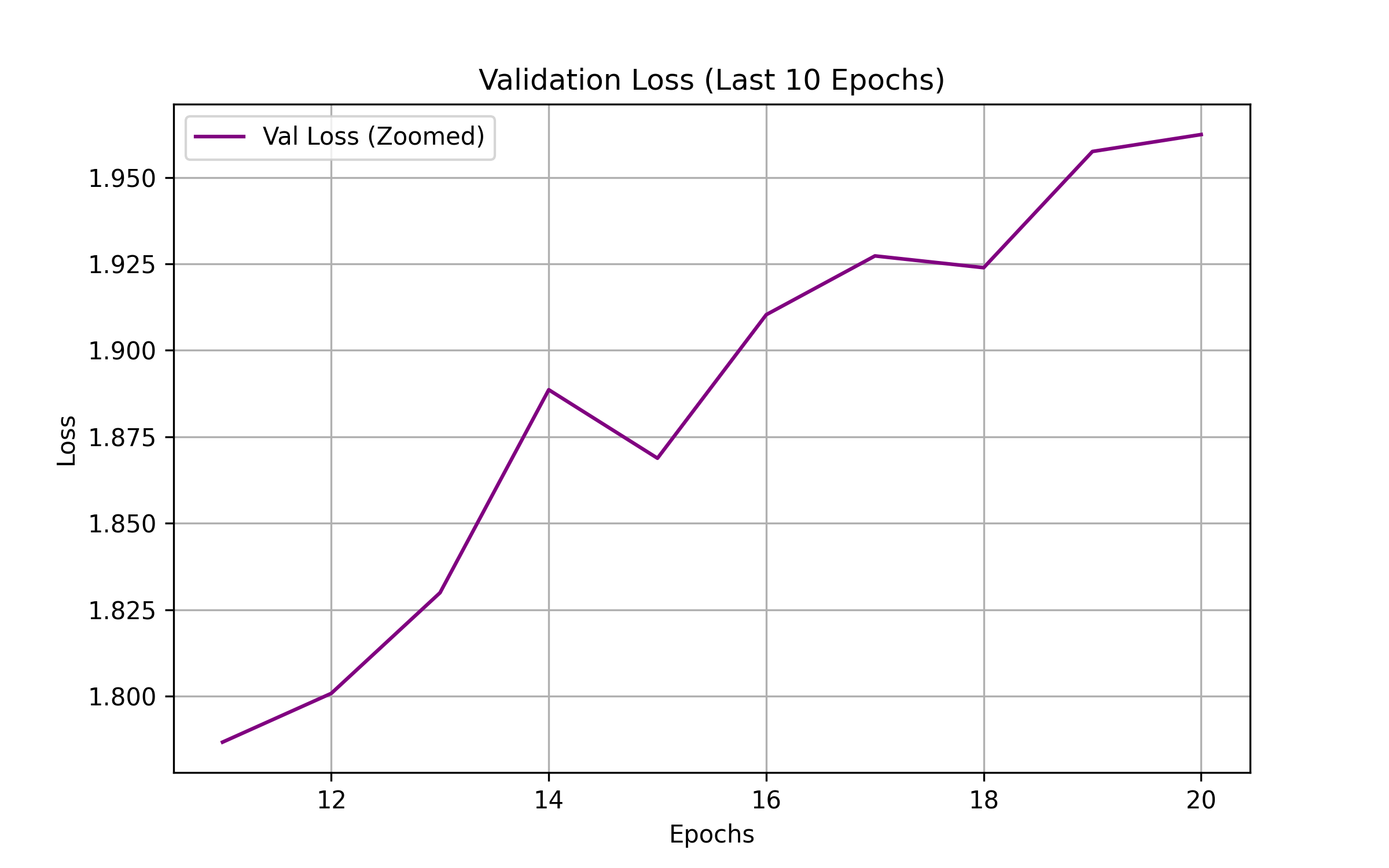

The loss curves show the training progress over 20 epochs. The model achieves good convergence with both training and validation loss decreasing steadily.

The accuracy plot demonstrates the model's learning progress, with training accuracy reaching 99% and validation accuracy stabilizing around 83%.

The model output shows the English text with its corresponding Arabic text.

Technical Challenges & Solutions

Arabic text has many variations of the same word due to diacritics, character forms, and orthographic conventions.

Solution: Implemented comprehensive text normalization using PyArabic library to handle:

- Removal of diacritics (tashkeel)

- Normalization of hamza forms

- Standardization of letter variants

- Handling of right-to-left direction

Arabic has significantly different sentence structure and word order compared to English.

Solution: Implemented:

- Bidirectional LSTM encoder to capture full context

- Attention mechanism to handle word order differences

- Special handling of RTL text generation

The Arabic vocabulary was large due to rich morphology and conjugation patterns.

Solution: Applied:

- Aggressive text normalization

- Subword tokenization

- Vocabulary size limitation with OOV handling

- Increased embedding dimensions

Key Code Implementation

Text Preprocessing

from pyarabic.araby import strip_tashkeel, normalize_hamza

import re

def clean_english(text):

text = text.lower()

text = re.sub(r'[^a-z0-9\s]', '', text)

return text.strip()

def clean_arabic(text):

text = strip_tashkeel(text)

text = normalize_hamza(text)

text = text.replace('ة', 'ه').replace('ى', 'ي')

text = re.sub(r'[إأٱآا]', 'ا', text)

text = re.sub(r'[^\u0600-\u06FF0-9\s]', '', text)

return re.sub(r'\s+', ' ', text).strip()

# Apply preprocessing

df['English'] = df['English'].apply(clean_english)

df['Arabic'] = df['Arabic'].apply(clean_arabic)

df['Arabic'] = df['Arabic'].apply(lambda x: '<start> ' + x + ' <end>')Model Architecture

from tensorflow.keras.layers import Input, Embedding, LSTM, Dense, Bidirectional

from tensorflow.keras.models import Model

# Encoder

encoder_inputs = Input(shape=(max_eng_len,))

encoder_embedding = Embedding(input_dim=len(eng_tokenizer.word_index)+1,

output_dim=256)(encoder_inputs)

# Bidirectional LSTM encoder

encoder_bi_lstm = Bidirectional(LSTM(256, return_state=True))

encoder_outputs, forward_h, forward_c, backward_h, backward_c = encoder_bi_lstm(encoder_embedding)

# Concatenate states for decoder

state_h = tf.keras.layers.Concatenate()([forward_h, backward_h])

state_c = tf.keras.layers.Concatenate()([forward_c, backward_c])

# Decoder

decoder_inputs = Input(shape=(max_ar_len - 1,))

decoder_embedding = Embedding(input_dim=len(ar_tokenizer.word_index)+1,

output_dim=512)(decoder_inputs)

decoder_lstm = LSTM(512, return_sequences=True)

decoder_outputs = decoder_lstm(decoder_embedding, initial_state=[state_h, state_c])

decoder_dense = Dense(len(ar_tokenizer.word_index)+1, activation='softmax')(decoder_outputs)

model = Model([encoder_inputs, decoder_inputs], decoder_dense)Translation Function

def translate_sentence(sentence):

# Clean the English sentence

sentence = clean_english(sentence)

# Convert to sequence

eng_seq = eng_tokenizer.texts_to_sequences([sentence])

eng_seq = pad_sequences(eng_seq, maxlen=max_eng_len, padding='post')

# Initialize decoder input with start token

target_seq = np.zeros((1, max_ar_len-1))

target_seq[0, 0] = start_token

output_sentence = []

for i in range(max_ar_len-1):

# Predict next word

pred = model.predict([eng_seq, target_seq], verbose=0)

pred_token = np.argmax(pred[0, i, :])

# Stop if end token

if pred_token == end_token:

break

# Save predicted word

output_word = ar_tokenizer.index_word.get(pred_token, '')

output_sentence.append(output_word)

# Update target sequence

if i+1 < max_ar_len-1:

target_seq[0, i+1] = pred_token

return ' '.join(output_sentence)